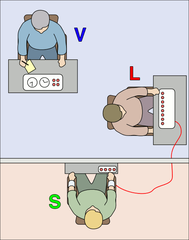

Julie A. Woodzicka (Washington and Lee University) and Marianne LaFrance (Yale) report an experiment reminiscent of Milgram’s famous studies of obedience to authority. Reminiscent both because it highlights the gap between how we imagine we’ll respond under pressure and how we actually do respond, and because it’s hard to imagine an ethics review board allowing it.

The study, reported in the Journal of Social Issues in 2001, involved the following (in their own words):

we devised a job interview in which a male interviewer asked female job applicants sexually harassing questions interspersed with more typical questions asked in such contexts.

The three sexually harassing questions were (1) Do you have a boyfriend? (2) Do people find you desirable? and (3) Do you think it is important for women to wear bras to work?

Participants, all women, average age 22, did not know they were in an experiment and were recruited through posters and newspaper adverts for a research assistant position.

The results illuminated what targets of harassment do not do. First, no one refused to answer: Interviewees answered every question irrespective of whether it was harassing or nonharassing. Second, among those asked the harassing questions, few responded with any form of confrontation or repudiation. Nonetheless, the responses revealed a variety of ways that respondents attempted to circumvent the situation posed by harassing questions.

Just as with the Milgram experiment, these results contrast with how participants from a companion study imagined they would respond when the scenario was described to them:

The majority (62%) anticipated that they would either ask the interviewer why he had asked the question or tell him that it was inappropriate. Further, over one quarter of the participants (28%) indicated that they would take more drastic measures by either leaving the interview or rudely confronting the interviewer. Notably, a large number of respondents (68%) indicated that they would refuse to answer at least one of the three harassing questions.

Part of the difference, the researchers argue, is that women imagining the harassing situation overestimate the anger they will feel. When confronted with actual harassment, fear replaces anger, they claim. Women asked the harassing questions reported significantly higher rates of fear than women asked the merely surprising questions. Coding of facial expressions during the (secretly videoed) interviews revealed that the harassed women also smiled more: fake (non-Duchenne) smiles, presumably aimed at appeasing a harasser they were afraid of.

The research report doesn’t indicate what, if any, ethical review process the experiment was subject to.

Obviously it is an important topic, with disturbing and plausible findings. The researchers note that courts have previously interpreted inaction following harassment as indicative of some level of consent. But, despite the real-world relevance, is it a topic important enough to justify employing a man to sexually harass unsuspecting women?

Reference: Woodzicka, J. A., & LaFrance, M. (2001). Real versus imagined gender harassment. Journal of Social Issues, 57(1), 15-30.

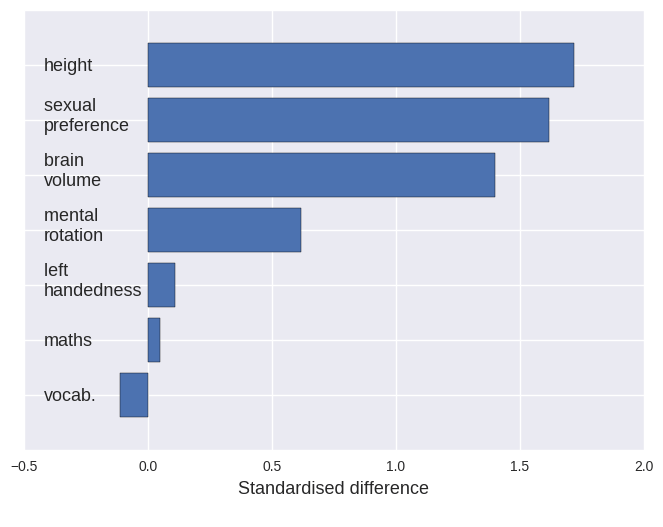

Previously: a series of Gender Brain Blogging

Much more previously: an essay I wrote arguing that moral failures are often defined by failures of imagination, not of reason: The Narrative Escape

The brilliant developmental neuropsychologist Annette Karmiloff-Smith has
