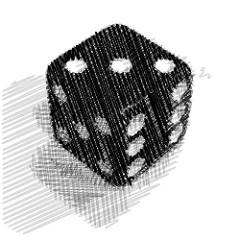

The Devil looks you in the eyes and offers you a bet. Pick a number and if you successfully guess the total he’ll roll on two dice you get to keep your soul. If any other number comes up, you go to burn in eternal hellfire.

You call “7” and the Devil rolls the dice.

A two and a four, so the total is 6 — that’s bad news.

But let’s not dwell on the incandescent pain of your infinite and inescapable future. Let’s think instead about your choice immediately before the dice were rolled.

Did you make a mistake? Was choosing “7” an error?

In one sense, obviously yes. You should have chosen 6.

But in another important sense you made the right choice. There are more combinations of dice outcomes that add to 7 than to any other number. The chances of winning if you bet 7 are higher than for any other single number.
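If you want to check that claim rather than take it on trust, a minimal sketch like the one below counts every combination of two fair six-sided dice. The function name `two_dice_distribution` and the variable names are mine, chosen for illustration only.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def two_dice_distribution(sides=6):
    """Return {total: probability} for the sum of two fair dice."""
    # Enumerate every ordered pair of faces and count how often each total occurs.
    counts = Counter(a + b for a, b in product(range(1, sides + 1), repeat=2))
    outcomes = sides ** 2
    return {total: Fraction(n, outcomes) for total, n in sorted(counts.items())}


distribution = two_dice_distribution()

# The best single call is the total with the highest probability.
best_call = max(distribution, key=distribution.get)

for total, probability in distribution.items():
    print(f"{total:2d}: {probability}")
print("best call:", best_call)
```

Six of the 36 combinations add to 7, against five for 6 or 8, so calling “7” wins one time in six. That is the best available, and it still loses most of the time.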

The distinction is between a particular choice which happens to be wrong, and a choice strategy which is actually as good as you can do in the circumstances. If we replace the Devil’s Wager with the situations the world presents you, and your choice of number with your actions in response, then we have a handle on what psychologists mean when they talk about “cognitive error” or “bias”.

In psychology, the interesting errors are not decisions that just happen to turn out wrong. The interesting errors are decisions which people systematically get wrong, and get wrong in a particular way. As well as being predictable, these errors are interesting because they must be happening for a reason.

If you met a group of people who always bet “6” when gambling with the Devil, you’d be an incurious person if you assumed they were simply idiots. That judgement doesn’t lead anywhere. Instead, you’d want to find out what they believe that makes them think that’s the right choice strategy. Similarly, when psychologists find that people will pay more to keep something than they’d pay to obtain it, or are influenced by irrelevant information in their judgements of risk, there’s no profit in labelling this “irrationality” and leaving it at that. The interesting question is why these choices seem common to so many people. What is it about our minds that disposes us to make these same errors, to have in common the same choice strategies?

You can get traction on the shape of possible answers from the Devil’s Wager example. In this scenario, why would you bet “6” rather than “7”? Here are three possible general reasons, and their explanations in the terms of the Devil’s Wager, and also a real example.

1. Strategy is optimised for a different environment

If you expected the Devil to roll a single loaded die, rather than a fair pair of dice, then calling “6” would be the best strategy, rather than a sub-optimal one.

Analogously, you can understand a psychological bias by understanding which environment it is intended to match. If I love sugary foods so much it makes me fat, part of the explanation may be that my sugar cravings evolved at a point in human history when starvation was a bigger risk than obesity.

2. Strategy is designed for a bundle of choices

If you know you’ll only get to pick one number to cover multiple bets, your best strategy is to pick a number which works best over all bets. So if the Devil is going to give you best of ten, and most of the time he’ll roll a single loaded die, and only sometimes roll two fair dice, then “6” will give you the best total score, even though it is less likely to win for the two-fair-dice wager.
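To make the bundle argument concrete, here is a rough sketch with invented numbers. It assumes the loaded die shows a six half the time and each other face one time in ten, and that eight of the ten bets use the loaded die while two use the fair pair. None of these figures come from the scenario above; they are placeholders to show the shape of the calculation.

```python
from fractions import Fraction
from itertools import product

# Assumptions for illustration only (these numbers are hypothetical):
# the loaded die shows 6 half the time, and each other face 1 time in 10.
LOADED_DIE = {face: Fraction(1, 10) for face in range(1, 6)}
LOADED_DIE[6] = Fraction(1, 2)
LOADED_ROUNDS = 8      # bets settled with the single loaded die
FAIR_PAIR_ROUNDS = 2   # bets settled with two fair dice


def fair_pair_probability(call):
    """Chance that two fair six-sided dice add up to `call`."""
    hits = sum(1 for a, b in product(range(1, 7), repeat=2) if a + b == call)
    return Fraction(hits, 36)


def expected_wins(call):
    """Expected number of bets won across all ten if you always call `call`."""
    loaded = LOADED_DIE.get(call, Fraction(0))
    return LOADED_ROUNDS * loaded + FAIR_PAIR_ROUNDS * fair_pair_probability(call)


scores = {call: expected_wins(call) for call in range(1, 13)}
best_bundle_call = max(scores, key=scores.get)

for call, score in scores.items():
    print(f"call {call:2d}: expected wins {float(score):.2f}")
print("best single call for the bundle:", best_bundle_call)
```

Under these assumptions, always calling “6” wins a little over four of the ten bets on average, while always calling “7” wins about a third of one bet, even though “7” remains the better call on any roll of the two fair dice.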

In general, what looks like a poor choice may be the result of a strategy which treats a class of decisions as the same, and produces a good answer for that whole set. It is premature to call our decision making irrational if we look only at a single choice, which is the focus of the psychologist’s experiment, and not at the related set of choices of which it is part.

An example from the literature may be the Mere Exposure Effect, where we favour something we’ve seen before merely because we’ve seen it before. In experiments, this preference looks truly arbitrary, because the experiment decided which stimuli to expose us to and which to withhold, but in everyday life our familiarity with things tracks important variables such as how common, safe or sought out things are. The Mere Exposure Effect may result from a feature of our minds that assumes, all other things being equal, that familiar things are preferable, and that’s probably a good general strategy.

3. Strategy uses a different cost/benefit analysis

Obviously, we’re assuming everyone wants to save their soul and avoid damnation. If you felt like you didn’t deserve heaven, harps and angel wings, or that hellfire sounds comfortably warm, then you might avoid making the bet-winning optimal choice.

By extension, we should only call a choice irrational or suboptimal if we know what people are trying to optimise. For example, it looks like people systematically under-explore new ways of doing things when learning skills. Is this reliance on habit, similar to confirmation bias when exploring competing hypotheses, irrational? Well, in the sense that it slows your learning down, it isn’t optimal. But if it exists because exploration carries a risk (you might get the action catastrophically wrong, you might hurt yourself), or because the important thing is to minimise the cost of acting (and habitual movements require less energy), then it may in fact be better than reckless exploration.

So if we see a perplexing behaviour, we might reach for one of these explanations: the behaviour is right for a different environment, a wider set of choices, or a different cost/benefit analysis. Only when we are confident that we understand the environment (either evolutionary, or of training) which drives the behaviour, and the general class of choices of which it is part, and that we know which cost/benefit function the people making the choices are using, should we confidently say a choice is an error. Even then it is pretty unprofitable to call such behaviour irrational – we’d want to know why people make the error. Are they unable to calculate the right response? Mis-perceiving the situation?

A seemingly irrational behaviour is a good place to start investigating the psychology of decision making, but labelling behaviour irrational is a terrible place to stop. The topic really starts to get interesting when we start to ask why particular behaviours exist, and try to understand their rationality.

Previously/elsewhere:

Irrational? Decisions and decision making in context

My ebook: For argument’s sake: evidence that reason can change minds, which explores our over-enthusiasm for evidence that we’re irrational.
