You feel somebody is looking at you, but you don’t know why. The explanation lies in some intriguing neuroscience and the study of a strange form of brain injury.

Something makes you turn and see someone watching you. Perhaps on a busy train, or at night, or when you’re strolling through the park. How did you know you were being watched? It can feel like an intuition which is separate from your senses, but really it demonstrates that your senses – particularly vision – can work in mysterious ways.

Intuitively, many of us might imagine that when we look at something, signals travel from our eyes to the visual cortex and then we have the conscious experience of seeing it, but the reality is far weirder.

Once information leaves our eyes it travels to at least 10 distinct brain areas, each with their own specialised functions. Many of us have heard of the visual cortex, a large region at the back of the brain which gets the most attention from neuroscientists. The visual cortex supports our conscious vision, processing colour and fine detail to help produce the rich impression of the world we enjoy. But other parts of our brain are also processing different pieces of information, and these can be working away even when we don’t – or can’t – consciously perceive something.

Survivors of neural injury can cast some light on these mechanisms. When an accident damages the visual cortex, your vision is affected. If you lose all of your visual cortex you will lose all conscious vision, becoming what neurologists call ‘cortically blind’. But unlike someone who has lost their eyes, a cortically blind person is only mostly blind – the non-cortical visual areas can still operate. Although you can’t have the subjective impression of seeing anything without a visual cortex, you can respond to things captured by your eyes that are processed by these other brain areas.

In 1974 a researcher called Larry Weiskrantz coined the term ‘blindsight’ for the phenomenon of patients who were still able to respond to visual stimuli despite losing all conscious vision due to destruction of the visual cortex. Patients like this can’t read or watch films or anything requiring processing of detail, but they are – if asked to guess – able to locate bright lights in front of them better than mere chance. Although they don’t feel like they can see anything, their ‘guesses’ have a surprising accuracy. Other visual brain areas are able to detect the light and provide information on the location, despite the lack of a visual cortex. Other studies show that people with this condition can detect emotions on faces and looming movements.

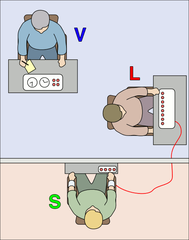

More recently, a dramatic study with a blindsight patient has shown how we might be able to feel that we are being looked at, without even consciously seeing the watcher’s face. Alan J Pegna at Geneva University Hospital, Switzerland, and team worked with a man called TD (patients are always referred to by initials only in scientific studies, to preserve anonymity). TD is a doctor who suffered a stroke which destroyed his visual cortex, leaving him cortically blind.

People with this condition are rare, so TD has taken part in a string of studies to investigate exactly what someone can and can’t do without a visual cortex. The study involved looking at pictures of faces which had their eyes directed forward, looking directly at the viewer, or which had their eyes averted to the side, looking away from the viewer. TD did this task in an fMRI scanner which measured brain activity during the task, and also tried to guess which kind of face he was seeing. Obviously for anyone with normal vision, this task would be trivial – you would have a clear conscious visual impression of the face you were looking at at any one time, but recall that TD has no conscious visual impression. He feels blind.

The scanning results showed that our brains can be sensitive to what our conscious awareness isn’t. An area called the amygdala, thought to be responsible for processing emotions and information about faces, was more active when TD was looking at the faces with direct, rather than averted, gaze. When TD was being watched, his amygdala responded, even though he didn’t know it. (Interestingly, TD’s guesses as to whether he was being watched weren’t above chance, and the researchers put this down to his reluctance to guess.)

Cortical, conscious vision is still king. If you want to recognise individuals, watch films or read articles like this, you are relying on your visual cortex. But research like this shows that certain functions are simpler, maybe more fundamental to survival, and exist separately from our conscious visual awareness.

Specifically, this study showed that we can detect that people are looking at us within our field of view – perhaps in the corner of our eye – even if we haven’t consciously noticed. It shows the brain basis for that subtle feeling that tells us we are being watched.

So when you’re walking down that dark road and turn to notice someone standing there, or look up on the train to see someone staring at you, it may be your nonconscious visual system monitoring your environment while your conscious attention was on something else. It may not be supernatural, but it certainly shows the brain works in mysterious ways.

This is my BBC Future column from last week. The original is here.

We’re happy to announce the re-launch of our project ‘Cyberselves: How Immersive Technologies Will Impact Our Future Selves’. Straight out of Sheffield Robotics, the project aims to explore the effects of technology like robot avatars, virtual reality, AI servants and other tech which alters your perception or ability to act. We’re interested in work, play and how our sense of ourselves and our bodies is going to change as this technology becomes more and more widespread.

Since 2002, hundreds of thousands of people around the world have logged onto a website run by Harvard University called

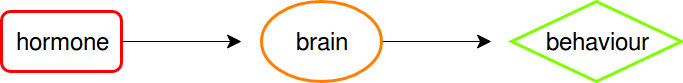

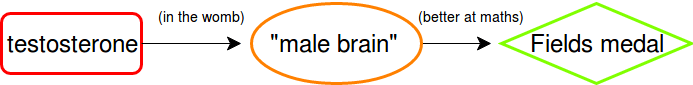

This account may at first appear compelling, perhaps because of its simplicity. But Fine presents us with an antidote to this initial intuition, in the form of the neurodevelopmental story of the spinal nucleus of the bulbocavernosus (SNB), a cluster of neurons in the spinal cord which controls muscles at the base of the penis.

Julie A. Woodzicka